I recently had an opportunity to buy an old 1U Dell server, a PowerEdge R610, for a good price. I thought it would be a neat project to set up FreeBSD on it and get it racked up at a local ISP.

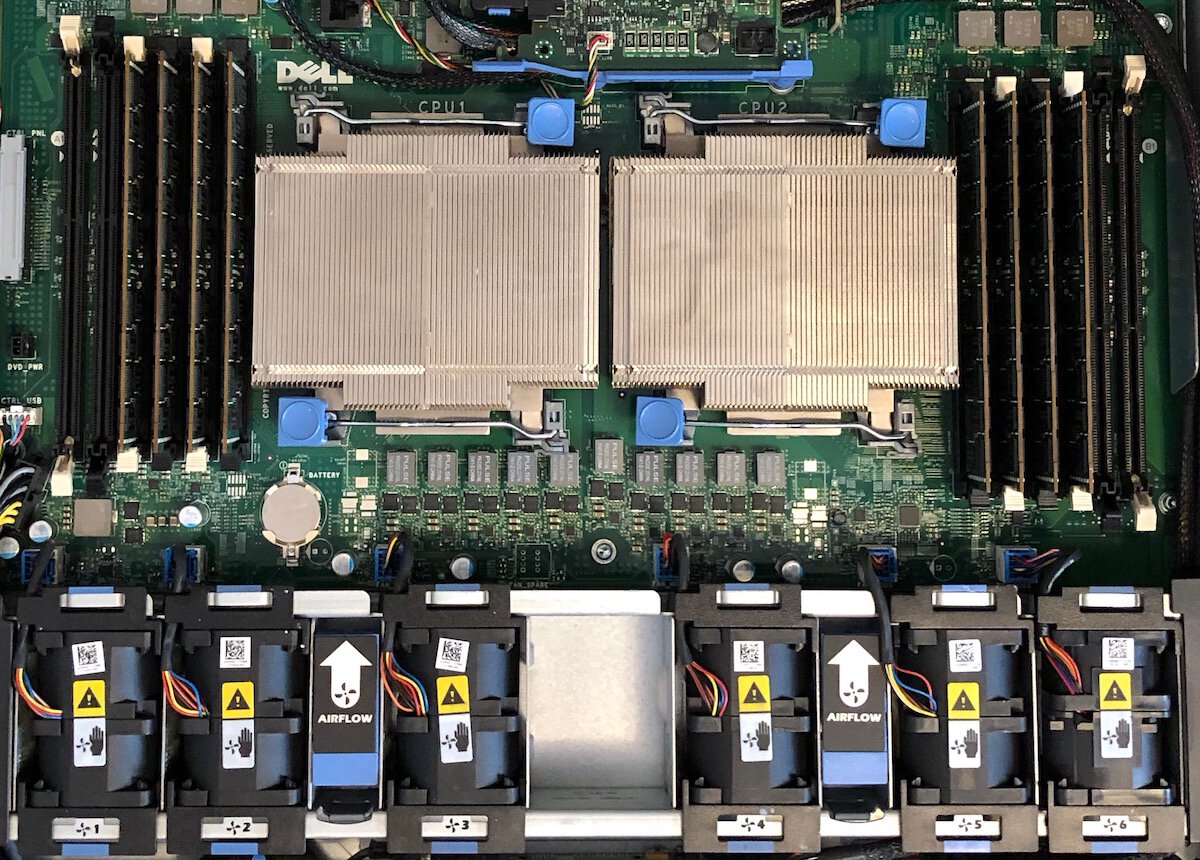

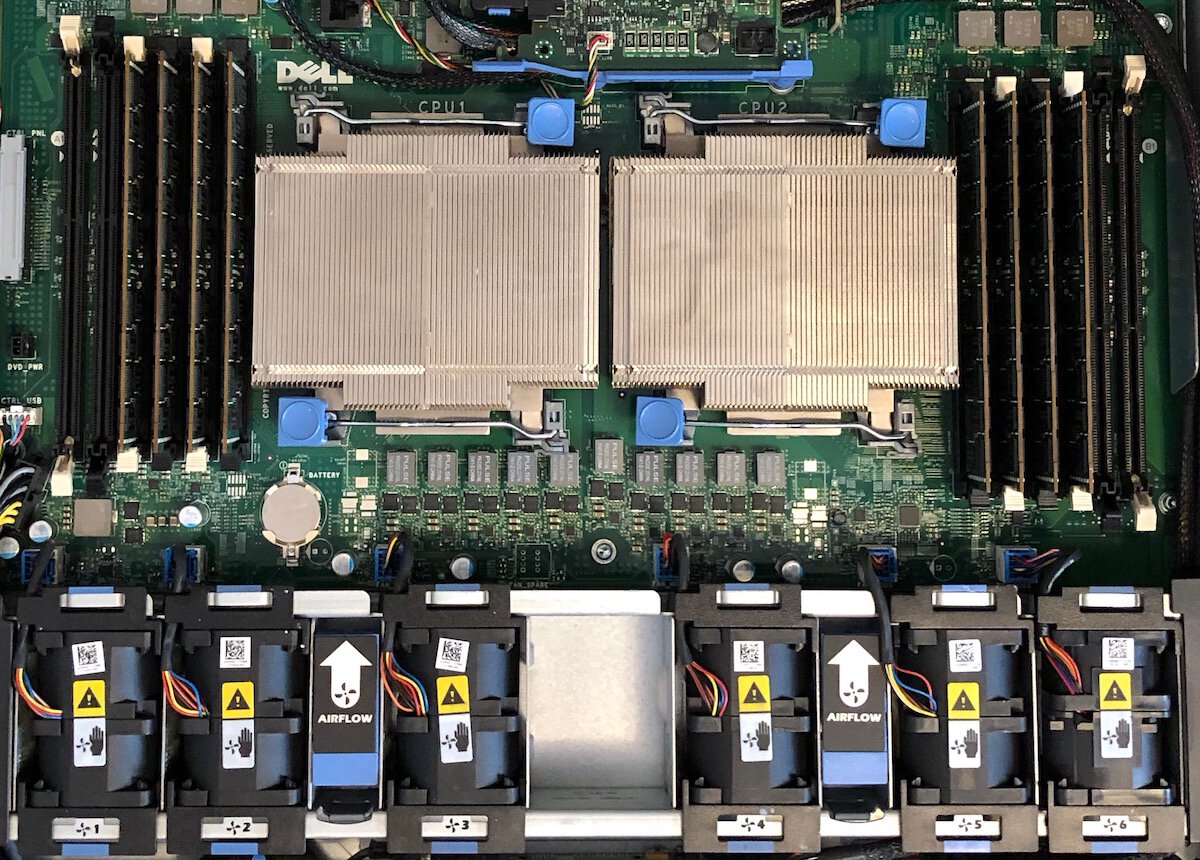

The server was loaded up with a pair of six-core Xeon processors, 64GB of memory, and six 600GB 10k RPM disks. I had been running OpenBSD on this hardware but ran into a couple of things that made me think I should try FreeBSD. From the FreeBSD documentation, it looked like it would support the hardware well and be a good fit for my projects.

The server came with a pair of redundant power supplies. My ISP offers only a single outlet in its most modestly priced colocation package, so I picked up an IEC Y-cable. This isn't as good as connecting each power supply to a separate power feed, but I suspect one of the ten-year-old power supplies is more likely to give up than the rack I'm in is to lose power.

Speaking of racks... I had to pick up a pair of rails since the server didn't come with them. Dell made both static and sliding rails for this model. I preferred the static rails since they accommodate a wider range of rack configurations and I didn't anticipate much need for working on the server while it was plugged in. The static rails seem to be less common on the used market, but I was eventually able to source a pair for a good price.

The R610 supports Advanced ECC, but it requires the memory to be installed in a particular layout, and it reduces the maximum number of DIMMs that can be installed from twelve to eight. Happily, the 64GB of memory that came with the server was already laid out as eight 8GB DIMMs; I just had to rearrange which slots they were in.

Since the system was already nearly ten years old, I took the opportunity while I had it open to replace the primary lithium cell on the motherboard. The storage controller also has a rechargeable lithium pack for its write-back cache, but its health was reported as good and visual inspection didn't indicate any issues. I left it for now.

The server came with the enterprise add-on card for the iDRAC. I didn't plan to use the features it provided and, frankly, the DRAC felt a little buggy when I was setting it up. Removing the card seemed to help, so I left it out.

One of my projects would really benefit from high disk I/O throughput and low latency. I scored a good deal on an old Intel NVMe card, a DC P3600 1.6TB model. It's advertised as having a sequential read speed of 2,600MB/s, but it's meant to be installed in a PCIe Gen3 x4 slot. This old server has only Gen2 slots, so I expect it to be limited to 2,000MB/s, since each Gen2 lane can carry 500MB/s and the card uses four lanes.
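The 2,000MB/s estimate follows from the PCIe line rates. As a rough worked example, assuming the standard signalling rates (5 GT/s per lane with 8b/10b encoding for Gen2, 8 GT/s with 128b/130b encoding for Gen3) and ignoring packet and protocol overhead, which lowers real-world throughput further:

```latex
\begin{aligned}
\text{Gen2, per lane} &= \frac{5\ \text{GT/s} \times \tfrac{8}{10}}{8\ \text{bit/byte}} = 500\ \text{MB/s} \\
\text{Gen2, x4} &= 4 \times 500\ \text{MB/s} = 2{,}000\ \text{MB/s} \\
\text{Gen3, per lane} &= \frac{8\ \text{GT/s} \times \tfrac{128}{130}}{8\ \text{bit/byte}} \approx 985\ \text{MB/s} \\
\text{Gen3, x4} &\approx 4 \times 985\ \text{MB/s} \approx 3{,}940\ \text{MB/s}
\end{aligned}
```

So a Gen3 x4 link leaves headroom above the card's advertised 2,600MB/s, while a Gen2 x4 link caps it below that figure.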

After replacing the motherboard battery, I had to set the time and date in the system setup menu (F2 during boot). While I was in there, I also confirmed that Advanced ECC was enabled and I set up serial console redirection (9,600 baud, VT220 terminal, redirection disabled after POST). I didn't plan on booting over the network, so I disabled the NIC in the boot order menu. I disabled the memory test as well, along with the built-in SATA controller (no optical drive and I'd be using the RAID controller for the disks).

Next, I popped into the storage controller's setup menu (ctrl-R during boot). The controller came with a pair of disks configured in RAID-1 and the other four configured in RAID-5. I cleared the controller configuration and created a new virtual disk with RAID-10 across the first four disks. I selected a 64k stripe size, enabled adaptive read-ahead, and enabled write-back (I'm not really sure about what the workload will be, but this seemed like an alright place to start). I added the remaining two disks as hot spares and initialized the virtual disk.
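A quick sanity check on the usable size of that array, assuming each disk provides exactly 600 × 10^9 bytes (real drives and controller metadata shift this slightly): RAID-10 mirrors pairs of disks and stripes across the pairs, so half of the raw capacity of the four active disks is usable, and the two hot spares add nothing. Storage tools often report binary units, so the second line converts to GiB.

```latex
\begin{aligned}
C_{\text{usable}} &= \frac{4 \times 600\ \text{GB}}{2} = 1{,}200\ \text{GB} \\
                  &= \frac{1{,}200 \times 10^{9}\ \text{B}}{2^{30}\ \text{B/GiB}} \approx 1{,}117.6\ \text{GiB}
\end{aligned}
```

That is in line with the roughly 1.1 TB array size that shows up later during partitioning and in the controller status output.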

My next visit was to the DRAC's configuration menu (ctrl-E at boot). I didn't have the password for the DRAC, so I performed a full reset to defaults from the configuration menu and powered the system back down. I used the server's front panel to set the DRAC IP address. Pretty soon it came up on my network. With the enterprise card removed, I found it was listening on TCP ports 22 (SSH), 80 (presumably just to redirect to 443), and 443 (HTTPS) as well as UDP 623 (remote RACADM, I guess). I ssh'd in using the default user name and password so I could disable the web server and remote RACADM, change the default user name and password, change the SSH port, set the serial-over-LAN baud rate, and reset the DRAC.

    racadm config -g cfgractuning -o cfgractunewebserverenable 0
    racadm config -g cfgractuning -o cfgractuneremoteracadmenable 0
    racadm config -g cfguseradmin -i 2 -o cfguseradminusername [user name]
    racadm config -g cfguseradmin -i 2 -o cfguseradminpassword [password]
    racadm config -g cfgractuning -o cfgractunesshport [port]
    racadm config -g cfgipmisol -o cfgipmisolbaudrate 9600
    racadm racreset

The online help and DRAC user's guide were very helpful in performing these tasks. I made a note of some other useful commands while I was in there.

    console com2                    open a session on the serial port (^\ to close)
    racadm serveraction powerup     power on the system
    racadm serveraction powerdown   power off the system
    racadm closessn -a              close all sessions other than the current one

I used `dd` on my iMac to put FreeBSD-13.0-RELEASE-amd64-memstick.img on a little USB stick.

    dd if=FreeBSD-13.0-RELEASE-amd64-memstick.img of=/dev/disk4 bs=512

Before using this stick to boot the server, I booted it on a ThinkPad X220 laptop and used the laptop to drop into a shell and update /boot/loader.conf to use a serial console. I didn't have to adjust the speed, since 9,600 baud is the default and I had already configured the DRAC and BIOS to use that speed.

    # The install media's root is mounted read-only; -u remounts it read-write.
    mount -u -o rw /dev/ufs/FreeBSD_Install /
    echo 'boot_multicons="YES"' >> /boot/loader.conf
    echo 'boot_serial="YES"' >> /boot/loader.conf
    echo 'console="comconsole,vidconsole"' >> /boot/loader.conf

Since I was performing the installation remotely, I read the modified image back off the memory stick, copied it to the server, and wrote it to a stick installed in the server's internal USB port.
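Because the stick is much larger than the image, reading the whole device back would also copy all of its unused space. The edited /boot/loader.conf lives inside the filesystem contained in the image, so reading back only as many bytes as the original image holds should capture the change. Here is a minimal sketch of that idea; `read_back` is a hypothetical helper, not something from this setup.

```shell
#!/bin/sh
# read_back DEVICE IMAGE OUTPUT  (hypothetical helper)
# Copies only the first N bytes of DEVICE into OUTPUT, where N is the size
# of the original IMAGE, so the copy skips the unused rest of the stick.
read_back() {
    dev=$1 img=$2 out=$3
    # Some wc implementations pad the byte count with spaces; strip them.
    size=$(wc -c < "$img" | tr -d ' ')
    if [ $((size % 512)) -ne 0 ]; then
        echo "read_back: $img is not a whole number of 512-byte blocks" >&2
        return 1
    fi
    # Read exactly size/512 blocks of 512 bytes from the device.
    dd if="$dev" of="$out" bs=512 count=$((size / 512)) 2>/dev/null
}
```

On the iMac this might be called as `read_back /dev/disk4 FreeBSD-13.0-RELEASE-amd64-memstick.img memstick-serial.img`, where the output file name is made up for illustration. A larger block size would be faster, but 512 keeps the arithmetic simple.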

With the server ready to go, I ssh'd to the DRAC again and ran `racadm serveraction powerup` to start the system and `console com2` to connect to the serial console and watch the boot process. I used the boot manager (F11 at boot, Esc-! through the DRAC) to select the internal USB port as the boot source.

At the loader menu, I selected Boot Multi user. At the welcome screen, I dropped out to a shell to check that the network and storage drivers had loaded and to see what the device names were. I checked the health of the PERC battery and also checked its settings.

    root@lucy:~ # mfiutil show battery
    mfi0: Battery State:
      Manufacture Date: 3/27/2009
      Serial Number: 2884
      Manufacturer: SANYO
      Model: DLNU209
      Chemistry: LION
      Design Capacity: 1700 mAh
      Full Charge Capacity: 637 mAh
      Current Capacity: 612 mAh
      Charge Cycles: 47
      Current Charge: 96%
      Design Voltage: 3700 mV
      Current Voltage: 3997 mV
      Temperature: 31 C
      Autolearn period: 90 days
      Next learn time: Wed Mar 9 00:27:47 2022
      Learn delay interval: 0 hours
      Autolearn mode: enabled
      Status: normal
      State of Health: good

The manual says a new battery can keep the 256 MB cache memory refreshed for 72 hours, which works out to an average current of about 24 mA. The present capacity should still give more than 24 hours, which I think is the replacement threshold. Still, I plan to replace the battery sooner rather than later.
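Worked through with the numbers from the battery report, assuming the cache draws a constant average current and ignoring temperature and aging effects on usable capacity:

```latex
\begin{aligned}
I_{\text{avg}} &\approx \frac{1700\ \text{mAh}}{72\ \text{h}} \approx 23.6\ \text{mA} \\
t_{\text{full charge}} &\approx \frac{637\ \text{mAh}}{23.6\ \text{mA}} \approx 27\ \text{h} \\
t_{\text{current charge}} &\approx \frac{612\ \text{mAh}}{23.6\ \text{mA}} \approx 26\ \text{h}
\end{aligned}
```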

I was surprised by how the cache settings were configured (I/O caching disabled, and write caching left enabled even with a bad BBU), so I updated them. I'm not sure whether all of these settings are exposed in the BIOS; it was very convenient to be able to check and update them with `mfiutil`.

    root@lucy:~ # mfiutil cache mfid0
    mfi0 volume mfid0 cache settings:
      I/O caching: disabled
      write caching: write-back
      write cache with bad BBU: enabled
      read ahead: adaptive
      drive write cache: default
    root@lucy:~ # mfiutil cache mfid0 enable
    root@lucy:~ # mfiutil cache mfid0 write-back
    root@lucy:~ # mfiutil cache mfid0 read-ahead adaptive
    root@lucy:~ # mfiutil cache mfid0 bad-bbu-write-cache disable
    root@lucy:~ # mfiutil cache mfid0 write-cache disable
    root@lucy:~ # mfiutil cache mfid0
    mfi0 volume mfid0 cache settings:
      I/O caching: writes and reads
      write caching: write-back
      write cache with bad BBU: disabled
      read ahead: adaptive
      drive write cache: disabled

While I was checking out the storage, I used `nvmecontrol` to check the remaining write endurance on the Intel SSD. To my pleasant surprise, it was almost brand new. It looks like it had been powered on for just short of six years but had only about 1.8 TB written to it in that time. I expect my workload to be read-heavy, so it should last a very long time for me.

    Available spare: 100
    Available spare threshold: 10
    Percentage used: 3
    Data units (512,000 byte) read: 3738344
    Data units written: 3474372
    Host read commands: 85183450734
    Host write commands: 129672361671
    Controller busy time (minutes): 1768
    Power cycles: 49
    Power on hours: 50656
    Unsafe shutdowns: 27
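The write total and power-on time come from a little arithmetic on these counters: NVMe counts data in units of 512,000 bytes. Below is a sketch of a hypothetical helper, `nvme_summary`, that does the conversion from the health log text. It assumes the `Label: value` layout shown above; the exact command used isn't shown here, and `nvmecontrol logpage -p 2 nvme0` (the SMART/health information page) is an assumption.

```shell
#!/bin/sh
# nvme_summary  (hypothetical helper)
# Reads NVMe health-log text on stdin and prints total data written
# (decimal TB) and power-on time (years of 365 days).
nvme_summary() {
    awk -F: '
        # One NVMe data unit is 1,000 blocks of 512 bytes = 512,000 bytes.
        /Data units written/ { written = $2 + 0 }
        /Power on hours/     { hours = $2 + 0 }
        END {
            printf "written: %.2f TB, powered on: %.2f years\n",
                written * 512000 / 1e12, hours / (24 * 365)
        }'
}
```

Fed the values above (for example `nvmecontrol logpage -p 2 nvme0 | nvme_summary`), it prints `written: 1.78 TB, powered on: 5.78 years`.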

I also ran some basic performance tests on the storage devices with `diskinfo` (I was switching from OpenBSD to FreeBSD largely in hopes of seeing a performance improvement here).

For mfid0, a PERC 6/i with four 600 GB 10,000 RPM SAS drives in RAID10:

    Transfer rates:
        outside:       102400 kbytes in   0.567512 sec =   180437 kbytes/sec
        middle:        102400 kbytes in   0.609079 sec =   168123 kbytes/sec
        inside:        102400 kbytes in   0.702063 sec =   145856 kbytes/sec
    Asynchronous random reads:
        sectorsize:      3703 ops in    3.161924 sec =     1171 IOPS
        4 kbytes:        3433 ops in    3.220038 sec =     1066 IOPS
        32 kbytes:       3318 ops in    3.178585 sec =     1044 IOPS
        128 kbytes:      1688 ops in    3.206632 sec =      526 IOPS
        1024 kbytes:      601 ops in    3.816521 sec =      157 IOPS

For nvme0, an Intel SSDPEDME016T4S:

    Transfer rates:
        outside:       102400 kbytes in   0.086459 sec =  1184376 kbytes/sec
        middle:        102400 kbytes in   0.086363 sec =  1185693 kbytes/sec
        inside:        102400 kbytes in   0.089994 sec =  1137854 kbytes/sec
    Asynchronous random reads:
        sectorsize:   1100022 ops in    3.000129 sec =   366658 IOPS
        4 kbytes:     1053922 ops in    3.000183 sec =   351286 IOPS
        32 kbytes:     156498 ops in    3.002404 sec =    52124 IOPS
        128 kbytes: diskinfo: aio_read: Operation not supported

It looked like this performance would be suitable for the projects I'm working on. I exited the shell and continued with the installation.

I accepted the defaults (kernel-dbg and lib32) at the Distribution Select screen. I chose Auto UFS at the Partitioning screen; I'd like to try out ZFS at some point, but I'll probably get to know it somewhere other than a production server first. I had it use the entire mfid0 disk (the RAID10 array) and selected GUID Partition Table as the Partition Scheme. I accepted the recommended layout:

    mfid0
      mfid0p1    512 KB   freebsd-boot
      mfid0p2    1.1 TB   freebsd-ufs    /
      mfid0p3    3.7 GB   freebsd-swap   none

After installation was complete, I set a root password. Then I selected bce0 for network configuration. I configured a static IP address and default router. The link came right up. Sharing the network interface with the DRAC doesn't seem to be any trouble with the FreeBSD driver (on OpenBSD only one or the other could use it at once). I configured DNS. I set the clock to use UTC since there's no need to share the hardware clock with another operating system. For the timezone, I selected Eastern - MI (most areas). I confirmed that the date and time looked right.

At the System Configuration screen, in addition to the defaults (sshd and dumpdev) I also selected ntpd to keep the system clock automatically synchronized.

At the System Hardening screen, after a little reading, I selected hide_uids, hide_gids, hide_jail, read_msgbuf, random_pid, clear_tmp, disable_syslogd, and disable_ddtrace. I think those will end up being good choices for my use case, but I'm sure they're easy enough to change if not.

I added a user and included them in the wheel group.

At the Final Configuration screen, I chose Exit. At the Manual Configuration screen, I chose to jump out to a shell so I could set the newly installed system up to use the serial console. I created /boot.config with only the `-D` option, to have the second-stage boot loader use a dual console configuration. I added the same lines to /boot/loader.conf as I had to the installation media to have the loader and kernel also use the serial console.
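Here is a minimal sketch of those two steps as a shell function. `configure_serial_console` is a hypothetical name, and the optional root-directory argument exists only so the function can be tried against a scratch directory; in the shell the installer opens in the new system, it would be run with no argument. Because it appends to loader.conf, running it twice would duplicate the lines; on FreeBSD, `sysrc -f /boot/loader.conf boot_serial=YES` is an alternative that updates an existing assignment instead.

```shell
#!/bin/sh
# configure_serial_console [ROOT]  (hypothetical helper)
# Writes the dual-console settings under ROOT (default: the current root).
configure_serial_console() {
    root=${1:-}
    # Second-stage boot loader: -D selects the dual console configuration.
    printf '%s\n' '-D' > "$root/boot.config"
    # Loader and kernel: use both the serial port and the video console.
    cat >> "$root/boot/loader.conf" <<'EOF'
boot_multicons="YES"
boot_serial="YES"
console="comconsole,vidconsole"
EOF
}
```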

I exited the shell and let the server reboot. It came up fine on the serial console as well as on the network. I logged in via SSH to do the rest of my work.

I wanted to make use of the empty space on the USB stick. I resized the second (freebsd) slice on the stick to take up the whole space, then added a d partition in the unused space and created a new filesystem on it. I created a directory to mount it on, added an entry to fstab, and mounted the new filesystem.

    root@lucy:~ # gpart resize -i 2 da0
    root@lucy:~ # gpart add -t freebsd-ufs -i 4 da0s2
    root@lucy:~ # newfs -L backup da0s2d
    root@lucy:~ # mkdir /backup
    root@lucy:~ # echo /dev/ufs/backup /backup ufs rw 0 2 >> /etc/fstab
    root@lucy:~ # mount /backup

I added a couple of lines to root's crontab to back up the etc directories to this partition once a week. Just in case.
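The crontab lines themselves aren't shown, so the following is only a sketch of one way to do it: a hypothetical `backup_etc` function that writes a dated, compressed tar archive of the given directories. Keeping the command in a small script rather than directly in the crontab also sidesteps cron's rule that an unescaped `%` in the command field is turned into a newline.

```shell
#!/bin/sh
# backup_etc SRCROOT DESTDIR PATH...  (hypothetical helper)
# Archives each PATH (relative to SRCROOT) into DESTDIR/etc-YYYYMMDD.tar.gz.
backup_etc() {
    src=$1 dest=$2
    shift 2
    stamp=$(date +%Y%m%d)
    # -C makes the archived paths relative, e.g. etc/rc.conf.
    tar -czf "$dest/etc-$stamp.tar.gz" -C "$src" "$@"
}
```

A script ending in `backup_etc / /backup etc usr/local/etc` could then be scheduled with an entry such as `@weekly /root/backup-etc`; both the script path and the directory list are illustrative.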

I added my public SSH keys to ~user/.ssh/authorized_keys, then I updated /etc/ssh/sshd_config to allow only public key authentication by uncommenting the `PasswordAuthentication no` line and setting `ChallengeResponseAuthentication no`. I also uncommented `PermitRootLogin no` to prevent root from logging in directly. I reloaded the configuration with `service sshd reload`.

Then I confirmed that I wasn't able to log in as root or use password authentication in my ssh client.

By default, /etc/defaults/rc.conf sets up Sendmail for local delivery (and outbound delivery, but I blocked that with the firewall). I wanted mail for root to be delivered to my new user, so I added a `root: user` line to /etc/aliases and ran `newaliases`.

By default, `periodic` sends a lot more email than I'd prefer. I created /etc/periodic.conf and added some lines to turn off reporting I wasn't interested in (and turn on a couple of things I was interested in):

    daily_show_success="NO"
    daily_status_disks_enable="NO"
    daily_status_network_enable="NO"
    daily_status_uptime_enable="NO"
    daily_status_ntpd_enable="YES"
    weekly_show_success="NO"
    weekly_noid_enable="YES"
    monthly_show_success="NO"
    monthly_accounting_enable="NO"
    security_show_success="NO"
    security_status_loginfail_enable="NO"

Since I don't visit the datacenter this server is in very often, I wanted to be able to monitor the health of the disk subsystem remotely. For now, I'd be happy just to see some vital statistics when I log in from time to time.

Aside from providing commands to configure the storage controller, `mfiutil` can get all kinds of information about how the controller and its attached disks are doing. As you might imagine, this utility must be run by root, but I wanted to see the status when I logged in as a regular user. I made a little script in root's home directory to get the bits of information I was most interested in.

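    #!/bin/sh
    # Summarize PERC volume, drive, and battery status (mfiutil needs root).
    date=$(date)
    echo "Disk status as of $date"
    # Skip the header lines of each listing.
    mfiutil show volumes | sed '1,2d'
    mfiutil show drives | sed '1d'
    # Pull the status and health fields out of the battery report.
    bat_status=$(mfiutil show battery | grep '^[[:space:]]*Status' | cut -d: -f2)
    bat_health=$(mfiutil show battery | grep '^[[:space:]]*State of Health' | cut -d: -f2)
    echo " Battery $bat_status, $bat_health"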
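The two battery lines in this script each run `mfiutil`, and `cut` leaves a leading space on each value. A possible alternative, sketched here as a hypothetical `battery_summary` function, runs the command once and trims the labels with `awk`; it assumes the `Label: value` layout of the `mfiutil show battery` output shown earlier.

```shell
#!/bin/sh
# battery_summary  (hypothetical helper)
# Condenses `mfiutil show battery` output, read on stdin, into one line.
battery_summary() {
    awk -F': *' '
        # Strip leading whitespace from the label before comparing it.
        { label = $1; sub(/^[ \t]+/, "", label) }
        label == "Status"          { status = $2 }
        label == "State of Health" { health = $2 }
        END { printf "Battery %s, %s\n", status, health }'
}
```

With the battery report shown earlier, `mfiutil show battery | battery_summary` would print `Battery normal, good`.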

I then added an entry to root's crontab to run this program daily and send the output to a file in the user's home directory.

    @daily /root/diskstatus > /home/user/diskstatus

In the user's .profile, I commented out the line that runs `fortune` and added a line instead to `cat ~/diskstatus`. As root, I also ran `cat /dev/null > /etc/motd.template` to clear out the welcome message that new installations show at login.

Now when I log in, I get output like this:

    Disk status as of Mon Dec 27 16:03:17 EST 2021
     mfid0 ( 1117G) RAID-10 64K OPTIMAL Disabled
     0 ( 559G) ONLINE <TOSHIBA MBF2600RC DA07 serial=EA03PB904GDP> SCSI-6 E1:S0
     1 ( 559G) ONLINE <TOSHIBA MBF2600RC DA07 serial=EA03PBC06MTJ> SCSI-6 E1:S1
     2 ( 559G) ONLINE <TOSHIBA AL13SEB600 DE0D serial=Y4K0A0GNFRD3> SCSI-6 E1:S2
     3 ( 559G) ONLINE <TOSHIBA AL13SEB600 DE09 serial=X4I0A02EFRD3> SCSI-6 E1:S3
     4 ( 559G) HOT SPARE <TOSHIBA AL13SEB600 DE0D serial=Y4K0A0MDFRD3> SCSI-6 E1:S4
     5 ( 559G) HOT SPARE <SEAGATE ST9600205SS CS05 serial=6XR1SM33> SAS E1:S5
     Battery CHARGING, good

I wanted to set up the pf firewall. FreeBSD has a few different firewall options, but I was already familiar with pf from using OpenBSD, and it seems to be a popular and well-supported option on FreeBSD too. I made a new /etc/pf.conf file. I set it up to skip the loopback interface, block new connections by default, allow new outgoing connections (except SMTP), and allow incoming SSH connections as well as incoming ICMP. I can add ports to the SSH rule in the future to allow other selected types of incoming connections (HTTP and HTTPS come to mind). I turned on fragment reassembly because I think it's needed for stateful filtering of fragmented packets.

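    set block-policy return
    set skip on lo0
    scrub in fragment reassemble
    # Block everything by default; later matching rules override this.
    block
    # Allow outgoing connections, except SMTP.
    pass out
    block out proto tcp to any port smtp
    # Allow incoming SSH and ICMP.
    pass in proto tcp to any port { ssh }
    pass in proto icmp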

After checking the new file for errors, I enabled pf and started it.

    root@vultr1:~ # pfctl -f /etc/pf.conf -n
    root@vultr1:~ # sysrc pf_enable=yes
    pf_enable: NO -> yes
    root@vultr1:~ # service pf start

I added a `security_status_pfdenied_enable="NO"` line to /etc/periodic.conf since I wasn't interested in getting emails about denied connections.

I hope that you found this helpful. If this is the kind of thing you're into, you may enjoy some of my other articles. If you have any questions or comments, please feel free to drop me an e-mail.

Aaron D. Parks